
Written by Rosslyn Elliott - Pub. Apr 11, 2024 / Updated Apr 16, 2024


On March 29, 2024, the FTC denied an application from the Entertainment Software Rating Board (ESRB) to use facial analysis scanning for age verification. The proposed technology was designed for online gaming, where it would verify parental consent for gamers under 13 years old.

The FTC denied the request without prejudice, which means that the ESRB and its co-applicants, Yoti Ltd. and SuperAwesome Ltd., may refile the application in the future.

The ESRB is a self-regulatory organization created by the video game industry. It was founded in 1994 to assign ratings to video games, a proactive step to head off government regulation of video games. Yoti Ltd. specializes in digital identity, and SuperAwesome Ltd. provides digital tools that help companies meet parental verification requirements online.

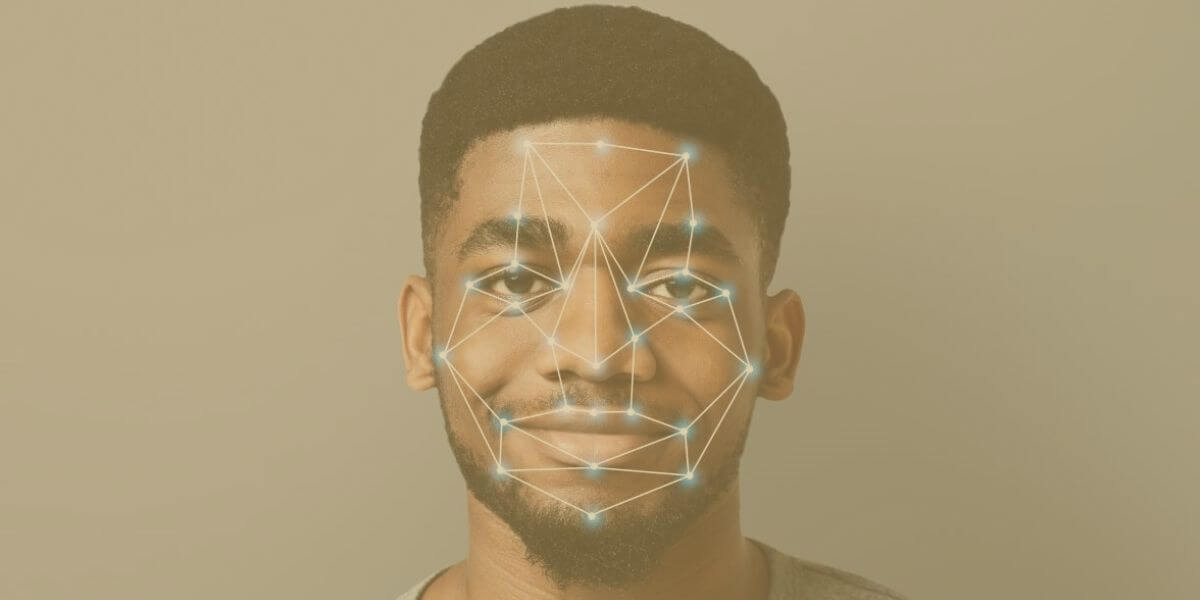

The ESRB and its partners submitted the facial analysis application in June 2023, and the FTC requested public comment in July. The technology would collect images of adults and use an “auto face capture module” to estimate whether the person in the image is actually an adult with the legal right to give parental consent. The ESRB calls this tool Privacy-Protective Facial Age Estimation and proposed it as a way to obtain Verifiable Parental Consent (VPC).

In a statement to the International Association of Privacy Professionals, the ESRB claimed that the new tool is a much-needed update to outdated methods for getting parental consent online.

Facial capture

The Children’s Online Privacy Protection Act (COPPA) requires companies to get parental consent before collecting any personal information from children under 13 years of age. Without that consent, companies can’t gather names, addresses, email addresses, usernames, or other personal information from anyone under 13. As a result, a child under 13 who wants to register to buy or play video games online needs a parent’s consent first.

After the FTC’s request for comment, over 350 commenters questioned the safety and privacy of facial analysis, citing the risk of bad actors creating deepfakes using the images.

In a formal statement issued shortly after the application, the ESRB clarified that the technology would not be used on children and that facial analysis would serve strictly to confirm the ages of parents. The ESRB also stated that “images and data used for this process are never stored, used for AI training, used for marketing, or shared with anyone.”

Online safety for kids

Online commentary on the issue was negative. Some commenters misunderstood the technology and assumed it would be used on children. Others pointed out that the technology could easily be abused by any older friend masquerading as a parent.

Privacy protection advocates praised the rejection of the application by the FTC.

Lia Holland of Fight for the Future released a statement on April 2, saying, “Forcing parents and guardians to give up biometric information so that children can play games or connect with peers is misguided and dangerous.”

The advocacy group accused “unscrupulous tech corporations” of having a hidden agenda to seek “so-called solutions that allow them to collect and exploit the data of children under 13.”

The FTC denied the ESRB application in part because a study of the technology’s accuracy is already underway. The National Institute of Standards and Technology (NIST) is collaborating with software developers to measure how accurately facial analysis can estimate age.

The last similar NIST study of facial scanning took place in 2014, practically a generation ago by digital standards. At that time, the age estimation algorithm estimated a person’s age to within 5 years only 67% of the time.

The commission wants NIST to finish its study before it considers the application.

“The Commission expects that this report will materially assist the Commission, and the public, in better understanding age verification technologies and the ESRB group’s application,” said the FTC in its March 29 letter declining the application.

Protecting privacy

The proposal for Privacy-Protective Facial Age Estimation uses only images of parents, not children. The tool is supposed to verify that the person scanned is an adult.

Facial age estimation is not the same as facial recognition. Facial recognition technology is intended to identify a specific person, while the facial scanning in this case is intended only to verify age, not identity.

Many people do not trust any technology that scans faces and records what is called “biometric” information. Privacy advocates fear that this data will be used for surveillance, control, or abuse by unethical companies or hackers. Biometric information can be used to create deepfakes for pornography or other harmful purposes.

COPPA is the Children’s Online Privacy Protection Act. It forbids companies from collecting personal information from children under 13 without the consent of their parents.

No one can predict with certainty how the data from facial analysis might be used in the future. Many people and organizations are concerned about the misuse of people’s likenesses, invasion of privacy, identity theft, and government surveillance.

Facial analysis would not require faster internet or special equipment; a typical internet connection and device would support it. The planned technology would use your device’s camera to scan your face.

No reputable company would attempt to scan your face or use facial recognition technology without informing you. You can be pretty sure that top internet providers will not be secretly collecting your biometrics. The bigger risk actually comes from employers, who sometimes require employees to give blanket consent to monitoring of all kinds. This risk is even higher now with the rise of remote work, so think carefully about your internet activity, especially when you are online at work.

Most of the time, you can decline to share biometric data. But you will be in a difficult position if your employer insists on fingerprint ID or facial recognition for building entry, for example.

Understand the use of biometrics
